In the previous article, I described how I used SignalR to coordinate clusters of Internet of Things (IoT) devices so that only one device in the cluster could do a specific task at a time. The example was based on a real-life project where IoT devices acted as public announcement systems at a railway station.

If one device on a specific platform was playing an announcement, other devices knew about it and wouldn’t play any audio until the first device was finished. This way, the passengers didn’t have to listen to conflicting announcements playing over each other while trying to figure out what they were saying.

Today, we will go deeper into the architecture of such clusters. In particular, we will look into how to solve another problem, which is very common in the IoT industry.

Project background

Very often, you will find that IoT devices are located in places with a weak wireless signal (either Wi-Fi or cellular) and/or a slow internet connection. This was the problem that we faced when we needed to install these devices at railway stations. Many railway stations, especially the ones located in the middle of nowhere in rural areas, have very slow internet connections. There were even some stations I worked at where the entire station, including all of its internet-connected devices, operated off just a single 4G SIM card!

This situation is highly problematic if what you are trying to achieve is real-time public announcements based on the live train movement feed processed by a server in the cloud. However, the good news is that we found a way. We architected the software on the IoT devices in such a way that clusters of devices became self-organizing. We also found a way for them to use as little network bandwidth as possible and still perform the functions they were built for.

Moreover, the architecture enabled the IoT device clusters to reorganize themselves if any of the devices failed, which, as you may imagine, does occasionally happen with small single-board computers out in the wild. Until a failed device is physically replaced with a new one, which may take days, the system still operates as normal even though it consists of fewer devices.

Technologies chosen

The company I was working for was primarily a .NET house, and this was the area where all developers had the most expertise. Luckily for us, this technology was more than sufficient to do what we wanted to do. Because .NET apps can run on different operating systems and CPU architectures, we could build them on a Windows machine running an Intel processor and then deploy them on an IoT device running a lightweight Linux distro on an ARM processor. Moreover, .NET was more than capable of directly interacting with the device hardware, such as via the GPIO interface, which enabled the devices to interact with commercial-grade audio amplifiers.

Another great feature of the .NET ecosystem is that it comes with a lightweight web server called Kestrel. This technology makes it easy to host network-connected apps on almost any device. This was important for our setup, as each IoT device had to be able to act both as a client and as a server, as we shall see shortly.

Finally, to enable real-time interactions between devices, we used SignalR. This technology allows for real-time two-way message exchange between the client and the server. Instead of using a model where the client initiates a request and the server returns a response, a persistent connection is established between the client and the server.

While the connection is active, not only the client can initiate communication. The server can do it too. This is useful in our setup where the server, a cloud-deployed system that was listening to the live train movement data feed, could send a message to a client, an IoT device located at a specific railway station platform, so an upcoming train could be announced.

Let’s now see how these technologies were used to create a self-coordinating system of IoT devices.

Building a self-organizing cluster

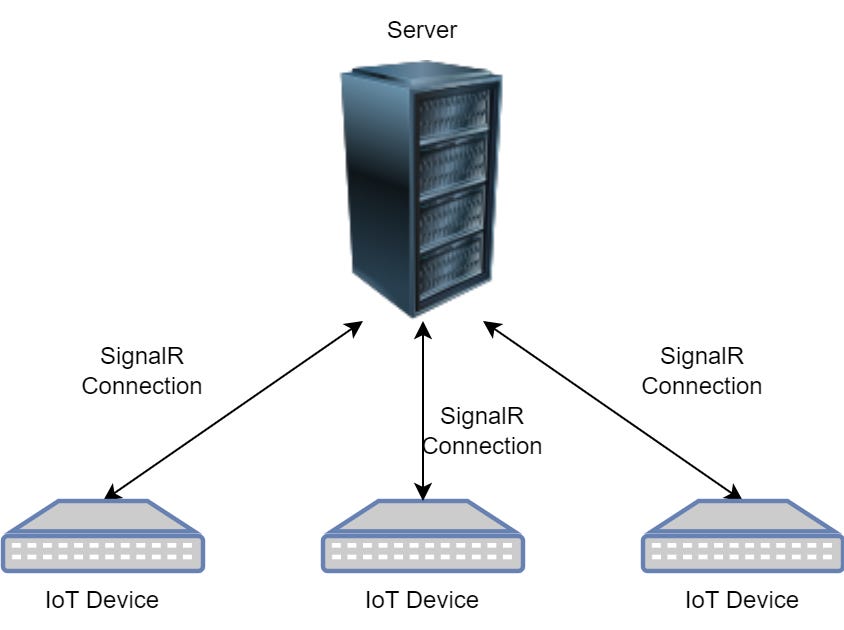

The easiest way to organize a cluster of IoT devices is to connect each device to the server via SignalR, as the following diagram shows:

However, let’s not forget that we need our system to work in an environment with a low network bandwidth. Our goal is to minimize the number of active connections to the outside server. If we have too many such connections, they may take up the entire bandwidth, and some of them may just drop when we need them the most.

Likewise, if we want the server to coordinate the devices, every instruction requires a round trip between the railway station and the server. The more round trips there are, the more chances there are for an individual command to fail.

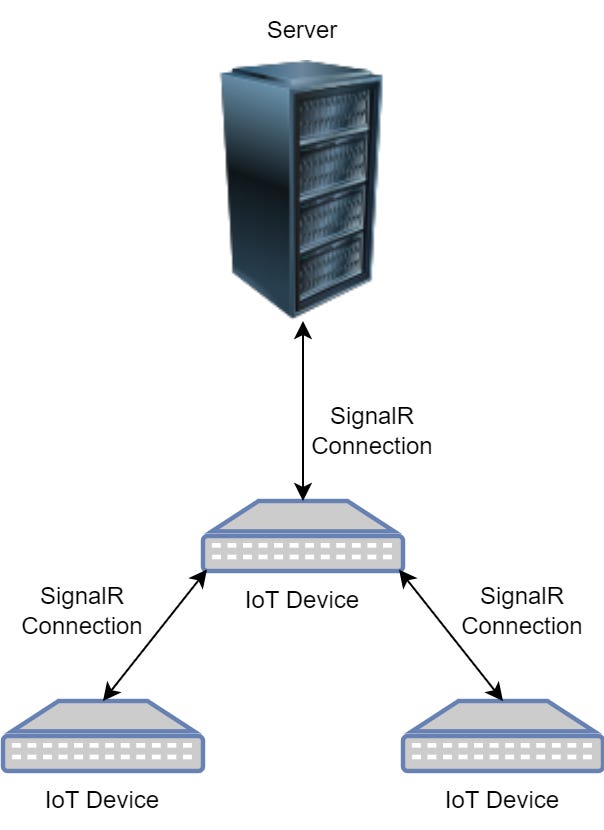

Therefore, the solution was to build a system that can self-coordinate over the local network. The system that we came up with looked like this:

Only one device in the cluster was directly connected to the server. All other devices were connected to the main device, also via SignalR. Remember we talked about a Kestrel server? This is the technology that allowed us to build such a setup relatively easily.

If the server needed to send an instruction to a device that wasn't the main device, it was still straightforward to organize. The instruction would be sent to the main device and the main device would route it to the appropriate device.

The same happened if any device needed to send its status to the server. It could route it via the main device. Finally, if a device needed to inform other devices in the cluster of its actions (e.g. starting to play an audio announcement), it didn’t even need to go to the server at all. It could all be done via the local network inside the cluster.
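To make the three message paths concrete, here is a minimal sketch of the main device's routing logic. It is written in Python only to keep it short and runnable; it is not the production .NET code, and every name in it (`Device`, `MainDevice`, `route_from_server`, and so on) is hypothetical. Plain method calls stand in for SignalR messages.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    """A device in the cluster; `inbox` stands in for a SignalR message handler."""
    device_id: str
    inbox: list = field(default_factory=list)

    def receive(self, message: str) -> None:
        self.inbox.append(message)


@dataclass
class MainDevice(Device):
    """The only device in the cluster with a connection to the cloud server."""
    peers: dict = field(default_factory=dict)   # device_id -> Device
    uplink: list = field(default_factory=list)  # messages relayed to the server

    def connect(self, device: Device) -> None:
        self.peers[device.device_id] = device

    def route_from_server(self, target_id: str, message: str) -> None:
        # Server instruction: handle it locally or forward it over the local network.
        if target_id == self.device_id:
            self.receive(message)
        else:
            self.peers[target_id].receive(message)

    def route_to_server(self, sender_id: str, status: str) -> None:
        # Device status: relayed through the single outside connection.
        self.uplink.append((sender_id, status))

    def broadcast_local(self, sender_id: str, message: str) -> None:
        # Cluster-internal notice: delivered to everyone except the sender,
        # without ever leaving the local network.
        for device_id, device in self.peers.items():
            if device_id != sender_id:
                device.receive(message)
        if sender_id != self.device_id:
            self.receive(message)
```

In this sketch, a "now playing" notice from one platform reaches every other device through `broadcast_local`, while `uplink` stays empty, which is the bandwidth saving the design is after.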

The process of setting up such clusters was also fully automated. Let’s see how it was done.

Cluster setup

Before the devices were shipped to their location, each device was configured to represent a specific platform at a specific station. The server-based system already knew how many platforms were supposed to be at each station.

When the devices came online, the following would happen:

- Each device would send the initial HTTP request to the server with some information about itself, such as a local IP address.

- The first device that managed to do this would become the main device.

- Any subsequent device that sent the request would be instructed by the server to establish a SignalR connection to the main device. The server's response included the main device's IP address.
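The steps above can be sketched as a small piece of server-side logic. This is an illustration in Python rather than the actual .NET implementation: `StationRegistry`, `RegistrationReply`, and the role names are hypothetical, and a method call stands in for the initial HTTP request.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RegistrationReply:
    role: str                       # "main" or "follower"
    main_ip: Optional[str] = None   # set only for followers


class StationRegistry:
    """Server-side record of which device is the main one at each station."""

    def __init__(self) -> None:
        self._main_ip = {}   # station -> local IP of the main device
        self._devices = {}   # station -> set of all registered local IPs

    def register(self, station: str, local_ip: str) -> RegistrationReply:
        self._devices.setdefault(station, set()).add(local_ip)
        main_ip = self._main_ip.get(station)
        if main_ip is None or main_ip == local_ip:
            # First device to register (or the main device re-registering).
            self._main_ip[station] = local_ip
            return RegistrationReply(role="main")
        # Everyone else is told where to open its SignalR connection.
        return RegistrationReply(role="follower", main_ip=main_ip)
```

A device that receives `role="follower"` would then connect to the Kestrel-hosted endpoint on `main_ip` instead of connecting to the cloud server.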

The server would always know which devices were connected. This information was stored in a database. Therefore, if the next train was approaching a platform that did not have a working device associated with it, the server would instruct another device to play the announcement. That device would be selected based on its proximity to the platform with the missing device.
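As a rough sketch of that fallback, assume platforms are numbered and that a smaller difference in platform number means the device is physically closer. That assumption is mine, purely for illustration; a real station would need an actual distance table. The function name `pick_announcer` is also hypothetical.

```python
from typing import Iterable, Optional


def pick_announcer(target_platform: int, online_platforms: Iterable[int]) -> Optional[int]:
    """Return the platform whose device should play the announcement."""
    online = set(online_platforms)
    if target_platform in online:
        return target_platform   # the platform's own device is available
    if not online:
        return None              # no device at the station can play audio
    # Assumption: the closest platform number is the closest device.
    # Ties are broken in favour of the lower platform number.
    return min(online, key=lambda p: (abs(p - target_platform), p))
```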

The ability to still make announcements even if some devices were missing or not operational made the system resilient, which takes us to the next point.

Self-correcting process

So, if we only have one device connected to the server at any point in time, don’t we have a single point of failure? Well, not quite.

SignalR itself can detect whether a connection has been broken. We can even attach event handlers to such an event. However, we also added a system of heartbeat messages, which made this process even more reliable.

Heartbeats are small packets of data that are exchanged between the client and the server at regular intervals, such as every 30 seconds. A system can be configured to expect the heartbeats to arrive and to react to missing heartbeats. For example, if no heartbeat has been received in 60 seconds, we can assume that there is either something wrong with the network or that the device that was sending the heartbeat has failed.
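Here is a minimal sketch of the receiving side of such a scheme, using the 60-second timeout from the example above. It is illustrative Python, not the production code; `HeartbeatMonitor` and its methods are hypothetical names, and the clock is injectable so the logic can be exercised without waiting.

```python
import time


class HeartbeatMonitor:
    """Tracks the last heartbeat per device and reports the ones that went quiet."""

    def __init__(self, timeout_seconds: float = 60.0, clock=time.monotonic):
        self._timeout = timeout_seconds
        self._clock = clock      # injectable for testing
        self._last_seen = {}     # device_id -> time of the last heartbeat

    def beat(self, device_id: str) -> None:
        # Called whenever a heartbeat message arrives from a device.
        self._last_seen[device_id] = self._clock()

    def missing(self) -> list:
        # Devices whose last heartbeat is older than the timeout.
        now = self._clock()
        return sorted(
            device_id
            for device_id, seen in self._last_seen.items()
            if now - seen > self._timeout
        )
```

A periodic check of `missing()` is then enough to decide which devices should be treated as offline.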

This is how, if any device in the cluster stops sending the heartbeat, we can assume that it’s offline, arrange its replacement, and route its tasks to another device. But what if this happens to the main device? Well, in this case, we can implement the same process as we applied right at the beginning.

Any randomly selected device from the network can send an HTTP request to the server and nominate itself as the new main device. All other devices can then send HTTP requests to the server to receive the connection instructions. And the system keeps operating as normal.
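The server side of this failover can be sketched as follows, again in Python and with hypothetical names (`MainDeviceElection`, `report_main_lost`, `nominate`). The sketch assumes the same first-come rule as the initial setup: the first device to nominate itself after the main device is lost takes over, and every later caller is simply told who the main device is.

```python
class MainDeviceElection:
    """Server-side view of who the main device is for one station."""

    def __init__(self, main_ip=None):
        self.main_ip = main_ip

    def report_main_lost(self, lost_ip: str) -> None:
        # Ignore stale reports about a device that is no longer the main one.
        if self.main_ip == lost_ip:
            self.main_ip = None

    def nominate(self, candidate_ip: str) -> str:
        """Return the IP of the main device after handling this request."""
        if self.main_ip is None:
            self.main_ip = candidate_ip   # first candidate wins
        return self.main_ip
```

A device that gets its own IP back knows it is the new main device; any other device connects to the returned address.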

Wrapping up

Today, you learned how .NET and SignalR can be used to build self-coordinating IoT systems. Contrary to popular belief, software running on IoT devices doesn't have to be written in complex low-level languages such as C++ and Rust. High-level languages, such as C#, are more than sufficient for many real-life applications, even where you have to deal with low network bandwidth or interact directly with device hardware.

SignalR in particular is one of the main reasons why I love .NET so much. If you are creative, you can solve so many problems with it. It’s, pretty much, a one-stop-shop solution for anything that requires real-time interactivity over a network.
