Many design patterns were developed with SOLID principles in mind. Therefore, it will be hard to learn design patterns unless you know SOLID principles.

But SOLID principles aren’t merely a tool to help you learn design patterns. Every software developer who uses object-oriented programming languages needs to be familiar with SOLID principles and know how to apply them in their daily work. Knowing these principles will make you more effective in your job, which will help you progress in your career. It is also worth remembering that questions about SOLID principles are often asked during technical interviews, so not knowing how to apply them may prevent you from getting the job you want.

For those who aren’t familiar with what these principles are, SOLID is an abbreviation that stands for the following:

- Single responsibility principle

- Open-closed principle

- Liskov substitution principle

- Interface segregation principle

- Dependency inversion principle

Do you always need to use SOLID principles? Maybe not. After all, principles are not laws. There might be some exceptions where your code would actually be more readable or maintainable if you didn’t apply them. But to judge whether it makes sense not to apply them in any given situation, you first need to understand them. And this is what this part of the book will help you with.

But the important point is that you will struggle to fully understand design patterns unless you understand SOLID principles. SOLID principles are your foundation. Unlike design patterns, they don’t apply to any specific type of software development problem. They are fundamental components of clean code. And this is why we will start with them.

1. Single responsibility principle

We will start with the first of these principles: the single responsibility principle. I will explain its importance and provide some examples of its usage in JavaScript code.

Incidentally, as well as being the first principle in the abbreviation, it is also the easiest one to grasp, to implement and to explain. Arguably, it is also the most important principle on the list. So, let’s go ahead and find out what it is and why you, as a developer, absolutely must know it.

The full solution demonstrated in this chapter is available via the following link:

https://github.com/fiodarsazanavets/solid-principles-on-javascript

What is the single responsibility principle

The single responsibility principle states that every self-contained unit of code (usually a class) should have one, and only one, reason to change. In simpler terms, this means that any given class should be responsible for only one specific piece of functionality.

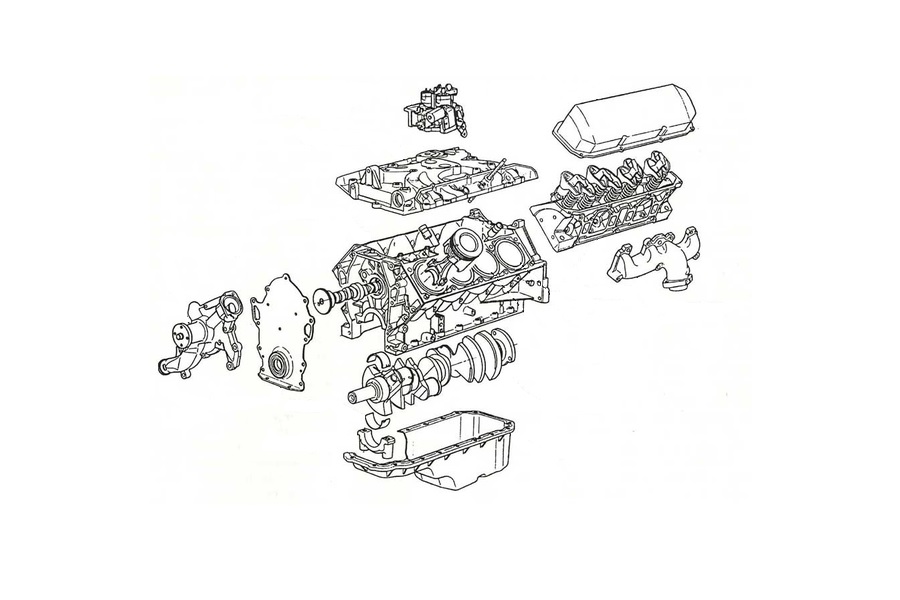

Basically, your code should be structured like a car engine. Even though the whole engine acts as a single unit, it consists of many components, each playing a specific role. A spark plug exists only to ignite the fuel vapors. A cam belt is there only to synchronize the rotation of the crankshaft and the camshafts and so on.

Each of these components is atomic (unsplittable), self-contained and easily replaceable. Each of your classes should be the same.

Clean Code, a book that every developer should read, provides an excellent and easy-to-digest explanation of the single responsibility principle, along with some examples in Java. What I will do now is explain why the single responsibility principle is so important, using some JavaScript examples.

The importance of the single responsibility principle

For those who are not familiar with JavaScript, this book will give you a basic introduction. In our example, we have a basic Node.js application with an `index.js` file as its entry point.

What we have is a basic console application that reads the input text from a specified text file, wraps every paragraph in HTML `p` tags and saves the output in a new HTML file in the same folder as the input file. If we were to put the entire logic into a single file, it would look something like this:

```javascript
// index.js: the entire application in a single file
const fs = require('fs');
const readline = require('readline').createInterface({
  input: process.stdin,
  output: process.stdout
});

readline.question('Please specify the file to convert to HTML.', path => {
  processFileContent(path);
  readline.close();
});

function processFileContent(path) {
  const inputText = readAllText(path);
  // Split on Windows (\r\n) or Unix (\n) line endings
  const paragraphs = inputText.split(/\r?\n/);
  let textToWrite = '';

  for (const paragraph of paragraphs) {
    // Skip empty lines
    if (paragraph) {
      textToWrite += `<p>${paragraph}</p>\n`;
    }
  }

  textToWrite += '<br/>';
  writeToFile(path, textToWrite);
}

function readAllText(path) {
  return fs.readFileSync(path, 'utf8');
}

function writeToFile(path, content) {
  // Replace the file extension (if any) with .html
  const outputPath = path.replace(/\.[^/.]+$/, '') + '.html';

  fs.writeFile(outputPath, content, err => {
    if (err) {
      console.error(err);
      return; // don't report success if the write failed
    }
    console.log('HTML file created successfully.');
  });
}
```
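The output path in `writeToFile` is built by stripping the last extension with the regular expression `/\.[^/.]+$/` and appending `.html`. As an alternative sketch (not what the chapter’s code uses), Node’s built-in `path` module can do the same job; `toHtmlPath` and `toHtmlPathWithRegex` are hypothetical helper names. Both agree on ordinary file names, although they can differ on edge cases such as dot-files like `.bashrc`. The output shown assumes a Linux or macOS system.

```javascript
const path = require('path');

// Hypothetical helper: same folder, same base name, .html extension.
function toHtmlPath(inputPath) {
  const { dir, name } = path.parse(inputPath);
  return path.join(dir, `${name}.html`);
}

// The regular expression used in the chapter's writeToFile(), for comparison.
function toHtmlPathWithRegex(inputPath) {
  return inputPath.replace(/\.[^/.]+$/, '') + '.html';
}

for (const input of ['/tmp/notes.txt', '/tmp/archive.tar.gz', '/tmp/README']) {
  console.log(toHtmlPath(input), toHtmlPathWithRegex(input));
}
// /tmp/notes.html /tmp/notes.html
// /tmp/archive.tar.html /tmp/archive.tar.html
// /tmp/README.html /tmp/README.html
```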

This code will work, but it will be relatively difficult to modify. Because everything is in one place, the code takes longer to read than it needs to. Of course, this is just a simple example, but what if you had a real-life console application with far more complicated functionality all in one place?

And it is crucially important that you understand the code before you make any changes to it. Otherwise, you may inadvertently introduce bugs. So, you will pretty much have to read the entire file, even if only a tiny part of it is responsible for the functionality you are interested in. Otherwise, how would you know that nothing else in it will be affected by your changes?

Imagine another scenario. Two developers are working on the same file, but are making changes to completely different pieces of functionality within it. When they are ready to merge their changes, there is a merge conflict. And it’s an absolute nightmare to resolve, because each developer is only familiar with their own set of changes and doesn’t know how to reconcile them with the changes made by the other developer.

The single responsibility principle exists precisely to eliminate these kinds of problems. In our example, we can apply it by splitting our code into separate classes. As well as the `index.js` file, we will have the `FileProcessor` and `TextProcessor` classes, which we will move into separate JS files in the same folder (`fileProcessor.js` and `textProcessor.js`, respectively).

The content of our fileProcessor.js file will be as follows:

```javascript
// fileProcessor.js: reads the input file and writes the HTML output
const fs = require('fs');

class FileProcessor {
  #fullFilePath = '';

  constructor(fullFilePath) {
    this.#fullFilePath = fullFilePath;
  }

  readAllText() {
    return fs.readFileSync(this.#fullFilePath, 'utf8');
  }

  writeToFile(content) {
    // Replace the file extension (if any) with .html
    const outputPath = this.#fullFilePath.replace(/\.[^/.]+$/, '') + '.html';

    fs.writeFile(outputPath, content, err => {
      if (err) {
        console.error(err);
        return; // don't report success if the write failed
      }
      console.log('HTML file created successfully.');
    });
  }
}

module.exports = FileProcessor;
```

`#fullFilePath` is a private field, so it isn’t accessible outside the class. In JavaScript, class fields whose names are prefixed with `#` are private (a feature standardized in ES2022).
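To see what “private” means in practice, here is a trimmed-down stand-in for `FileProcessor` with a hypothetical `describe` method added for illustration. Code inside the class can read the field, but from the outside it does not show up as a property at all.

```javascript
// Trimmed-down stand-in for FileProcessor; describe() is a hypothetical addition.
class FileProcessor {
  #fullFilePath = '';

  constructor(fullFilePath) {
    this.#fullFilePath = fullFilePath;
  }

  describe() {
    // Methods declared inside the class body can read the private field.
    return `Processing ${this.#fullFilePath}`;
  }
}

const processor = new FileProcessor('notes.txt');

console.log(processor.describe());        // Processing notes.txt
console.log(Object.keys(processor));      // [] (private fields are not properties)
console.log(processor['#fullFilePath']);  // undefined (a different, public name)
console.log(JSON.stringify(processor));   // {}
// Writing processor.#fullFilePath out here would be a SyntaxError at parse time.
```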

The content of the textProcessor.js file will be as follows:

```javascript
// textProcessor.js: converts plain-text paragraphs into HTML
class TextProcessor {
  #fileProcessor = null;

  constructor(fileProcessor) {
    this.#fileProcessor = fileProcessor;
  }

  convertText() {
    const inputText = this.#fileProcessor.readAllText();
    // Split on Windows (\r\n) or Unix (\n) line endings
    const paragraphs = inputText.split(/\r?\n/);
    let textToWrite = '';

    for (const paragraph of paragraphs) {
      // Skip empty lines
      if (paragraph) {
        textToWrite += `<p>${paragraph}</p>\n`;
      }
    }

    textToWrite += '<br/>';
    this.#fileProcessor.writeToFile(textToWrite);
  }
}

module.exports = TextProcessor;
```

And this is what remains of our original index.js file:

```javascript
// index.js: the application entry point
const FileProcessor = require('./fileProcessor.js');
const TextProcessor = require('./textProcessor.js');

const readline = require('readline').createInterface({
  input: process.stdin,
  output: process.stdout
});

readline.question('Please specify the file to convert to HTML.', path => {
  const fileProcessor = new FileProcessor(path);
  const textProcessor = new TextProcessor(fileProcessor);
  textProcessor.convertText();
  readline.close();
});
```

Now the entire text-processing logic is handled by the `TextProcessor` class, while the `FileProcessor` class is solely responsible for reading from and writing to files.

This has made your code much more manageable. First of all, if you need to change a specific piece of functionality, you only need to modify the file responsible for it and nothing else. You won’t even have to know how anything else in the app works. Secondly, if one developer is changing how the app converts text while another is changing how files are processed, their changes will not clash.
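A further benefit of the split is that `TextProcessor` can be exercised without touching the disk, because all it needs is an object with `readAllText` and `writeToFile` methods. The sketch below passes in a hypothetical in-memory `FakeFileProcessor`; `TextProcessor` is repeated with the same logic as above so that the snippet runs on its own.

```javascript
// Same logic as textProcessor.js above, repeated so the snippet is self-contained.
class TextProcessor {
  #fileProcessor = null;

  constructor(fileProcessor) {
    this.#fileProcessor = fileProcessor;
  }

  convertText() {
    const paragraphs = this.#fileProcessor.readAllText().split(/\r?\n/);
    let textToWrite = '';
    for (const paragraph of paragraphs) {
      if (paragraph) textToWrite += `<p>${paragraph}</p>\n`;
    }
    textToWrite += '<br/>';
    this.#fileProcessor.writeToFile(textToWrite);
  }
}

// Hypothetical stand-in for FileProcessor that keeps everything in memory.
class FakeFileProcessor {
  constructor(inputText) {
    this.inputText = inputText;
    this.writtenContent = null;
  }

  readAllText() {
    return this.inputText;
  }

  writeToFile(content) {
    this.writtenContent = content; // record the output instead of writing a file
  }
}

const fake = new FakeFileProcessor('First paragraph.\r\nSecond paragraph.');
new TextProcessor(fake).convertText();
console.log(fake.writtenContent);
// <p>First paragraph.</p>
// <p>Second paragraph.</p>
// <br/>
```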

While we’ve split the text-processing and file-processing capabilities into their own single-responsibility classes, we have left only a minimal amount of code inside `index.js`, the application entry point. It is now solely responsible for launching the application, reading the user’s input and calling methods on the other classes.

The concept of class cohesion

In our example, it was very clear where the responsibilities should be split. And in most cases, the decision will be based on the same factor we have used: splitting responsibilities along atomic functional areas. In our case, the application was mainly responsible for two things, processing text and managing files, so it had two functional areas and we ended up with a separate class for each of them.

However, there will be situations where clear-cut functional areas are difficult to establish. Different pieces of functionality sometimes have very fuzzy boundaries. This is where the concept of class cohesion comes into play to help you decide which classes to split and which ones to leave as they are.

Class cohesion is a measure of how closely the public members of a given class are inter-related. If all public members are inter-related, the class has maximal cohesion, while a class with no inter-related public members has no cohesion. A practical way to determine the degree of cohesion within a class is to check whether all private class-level variables are used by all public members.

If every private class-level variable is used by every public member, then the class is known to have maximal cohesion. This is a very clear indicator that the class is atomic and shouldn’t be split. The exception would be when the class can be refactored in an obvious way and the process of refactoring eliminates some or all of the cohesion within the class.

If every public member inside the class uses at least one of the private class-level variables, while the variables themselves are inter-dependent and are used in combination inside some of the public methods, the class has less cohesion, but it would probably still not be in violation of the single responsibility principle.

If, however, there are some private class-level variables that are only used by some of the public members, while other private class-level variables are only ever used by a different subset of public members, the class has low cohesion. This is a good indicator that the class should probably be split into two separate classes.

Finally, if every public member is completely independent from any other public member, the class has zero cohesion. This would probably mean that every public method should go into its own separate class.
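To make the low-cohesion case concrete, here is a hypothetical `ReportService` (not part of the chapter’s application) in which one private field is used by one pair of methods and the other private field by a different pair. Splitting along that line gives two classes, `Report` and `Notifier`, in which every public member uses every private field.

```javascript
// Hypothetical low-cohesion class: #rows is used only by addRow()/toCsv(),
// while #recipients is used only by addRecipient()/notifyAll().
class ReportService {
  #rows = [];
  #recipients = [];

  addRow(row) { this.#rows.push(row); }
  toCsv() { return this.#rows.map(row => row.join(',')).join('\n'); }

  addRecipient(email) { this.#recipients.push(email); }
  notifyAll(message) { return this.#recipients.map(email => `To ${email}: ${message}`); }
}

// After the split, each class has maximal cohesion.
class Report {
  #rows = [];

  addRow(row) { this.#rows.push(row); }
  toCsv() { return this.#rows.map(row => row.join(',')).join('\n'); }
}

class Notifier {
  #recipients = [];

  addRecipient(email) { this.#recipients.push(email); }
  notifyAll(message) { return this.#recipients.map(email => `To ${email}: ${message}`); }
}

const report = new Report();
report.addRow(['name', 'score']);
report.addRow(['Alice', 42]);
console.log(report.toCsv()); // "name,score" and "Alice,42" on two lines

const notifier = new Notifier();
notifier.addRecipient('team@example.com');
console.log(notifier.notifyAll('Report ready')); // [ 'To team@example.com: Report ready' ]
```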

Let’s apply these criteria to our own classes.

In our TextProcessor class, we only have one method, convertText. So, we don’t even have to look at the cohesion. It has maximal cohesion already.

Our `FileProcessor` class has two methods, `readAllText` and `writeToFile`. Both of them use the `#fullFilePath` private field, which is initialized in the class constructor. So this class also has maximal cohesion and is therefore atomic.
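The check described above can be turned into a rough number. The sketch below uses a simple, hypothetical measure (not a standard metric from this chapter): the fraction of (public method, private field) pairs in which the method uses the field. A value of 1 means every method uses every field; lower values hint that a class might be worth splitting. The usage maps are written by hand here; nothing parses real classes.

```javascript
// usage:  { methodName: [names of private fields the method uses] }
// fields: all private fields declared by the class
function cohesionRatio(usage, fields) {
  const methods = Object.keys(usage);
  if (methods.length === 0 || fields.length === 0) return 1; // nothing to compare

  let usedPairs = 0;
  for (const method of methods) {
    for (const field of fields) {
      if (usage[method].includes(field)) usedPairs++;
    }
  }
  return usedPairs / (methods.length * fields.length);
}

// FileProcessor: both methods use #fullFilePath.
const fileProcessorRatio = cohesionRatio(
  { readAllText: ['#fullFilePath'], writeToFile: ['#fullFilePath'] },
  ['#fullFilePath']
);

// A hypothetical class whose methods use disjoint fields.
const lowCohesionRatio = cohesionRatio(
  { toCsv: ['#rows'], notifyAll: ['#recipients'] },
  ['#rows', '#recipients']
);

console.log(fileProcessorRatio); // 1
console.log(lowCohesionRatio);   // 0.5
```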

God Object – the opposite of single responsibility

So, you now know what the single responsibility principle is and how it benefits you as a developer. You may also be interested to know that this principle has an opposite, which is known as the God Object.

In software development, a way of doing things that goes against what best practices prescribe is known as an anti-pattern, and the God Object is one such anti-pattern. It is just as important to name bad practices as it is to name good ones. If something has a name, it becomes easy to conceptualize and remember, and it is crucially important for software developers to remember what not to do.

In this case, the name perfectly describes what this object is. As you may have guessed, a God Object is a type of class that is attempting to do everything. Just like God, it is omnipotent, omniscient and omnipresent.

In our example, the first iteration of our code had `index.js` file containing the entire application logic, therefore it was a God Object in the context of our application. But this was just a simplistic example. In a real-life scenario, a God Object may span thousands of lines of code.

So, I don’t care whether you are religious or not. Everyone is entitled to worship any deity in the privacy of their own home. Just make sure you don’t put God into your code. And remember to always use the single responsibility principle.

2. Open-closed principle

The open-closed principle states that every atomic unit of code, such as a class, module or function, should be open for extension but closed for modification. The principle was coined in 1988 by Bertrand Meyer.

Essentially, this means that, once written, a unit of code should remain unchanged unless errors are detected in it. However, it should also be written in such a way that additional functionality can be attached to it in the future if requirements change or expand. This can be achieved through common features of object-oriented programming, such as inheritance and abstraction.
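As a minimal sketch of extension through abstraction (the inheritance route is shown later in this chapter), the hypothetical `HtmlConverter` below depends only on the idea of a rule object with an `apply(text)` method. New behavior is added by writing a new rule and passing it in, while the converter’s own code never changes. The class and rule names are illustrative assumptions, and the paragraph rule is simplified (no trailing `<br/>`).

```javascript
// Closed for modification: this class never changes when new rules appear.
class HtmlConverter {
  #rules;

  constructor(rules) {
    this.#rules = rules; // any objects that expose apply(text) => text
  }

  convert(text) {
    return this.#rules.reduce((result, rule) => rule.apply(result), text);
  }
}

// Open for extension: each rule is a separate, independently testable unit.
const paragraphRule = {
  apply: text => text
    .split(/\r?\n/)
    .filter(line => line)
    .map(line => `<p>${line}</p>`)
    .join('\n')
};

// Added later without touching HtmlConverter or paragraphRule.
const strikethroughRule = {
  apply: text => text.replace(/~~(.+?)~~/g, '<del>$1</del>')
};

const basic = new HtmlConverter([paragraphRule]);
const extended = new HtmlConverter([paragraphRule, strikethroughRule]);

console.log(basic.convert('Old ~~price~~\nNew price'));
// <p>Old ~~price~~</p>
// <p>New price</p>
console.log(extended.convert('Old ~~price~~\nNew price'));
// <p>Old <del>price</del></p>
// <p>New price</p>
```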

Unlike the single responsibility principle, which almost everyone agrees with, the open-closed principle has its critics. It’s almost always obvious how the single responsibility principle can be implemented and what benefits it will provide. However, trying to foresee where requirements may change in the future, and designing your classes so that most of those changes can be met without altering existing code, is often seen as unnecessary overhead that provides no benefit.

And the way software is written has moved on quite a bit since 1988. Back then, deploying a new software version was tedious, long and expensive, whereas many of today’s systems take minutes to deploy. And the whole process can be done on demand with a click of a mouse.

And with agile software development practices adopted throughout the industry, requirements change all the time. Quite often, the changes are radical. Whole sections of code get removed and replaced on a regular basis. So, why design for adherence to the open-closed principle if the component you are writing is quite likely to be ditched very soon?

Although these objections are valid, the open-closed principle still provides its benefits. Here is an example.

Implementing the open-closed principle in JavaScript

So far, we have refactored our application to ensure that it adheres to the single responsibility principle. The application does exactly what the requirements say and every element of it serves its own purpose. But we know that HTML doesn’t just consist of paragraphs, right?

So, while we are only being asked to read paragraphs and apply HTML formatting to them, it’s not difficult to imagine that we may be asked in the future to expand the functionality to be able to produce much richer HTML output.

With the current design, we would then have no choice but to rewrite our code. And although the impact of such changes on a small application would be negligible, what if we had to do this in a much larger application?

We would definitely need to rewrite the unit tests that cover the class, which we may not have enough time to do. So, even if we had good code coverage to start with, a tight deadline for delivering the new requirements may force us to ditch a few unit tests and therefore increase the risk of accidentally introducing defects.

What if we had existing services that call into our software but aren’t part of the same code repository? What if we don’t even know they exist? Some of them may break after receiving unexpected results, and we may not find out about it until everything has been deployed to production.

So, to prevent these things from happening, we can refactor our code as follows.

Our TextProcessor class will become this:

```javascript
// textProcessor.js: now a pure text-to-HTML converter with no file access
class TextProcessor {
  convertText(inputText) {
    // Split on Windows (\r\n) or Unix (\n) line endings
    const paragraphs = inputText.split(/\r?\n/);
    let textToWrite = '';

    for (const paragraph of paragraphs) {
      // Skip empty lines
      if (paragraph) {
        textToWrite += `<p>${paragraph}</p>\n`;
      }
    }

    textToWrite += '<br/>';
    return textToWrite;
  }
}

module.exports = TextProcessor;
```

We have now completely separated the file-processing logic from it. The main method of the class, `convertText`, now takes the input text as a parameter and returns the formatted output text. Otherwise, its logic is the same as before: all it does is split the input text into paragraphs and enclose each one of them in a `p` tag. Unlike languages such as C#, JavaScript has no `virtual` keyword: any class method can be overridden by a subclass, so this method is already open for extension if the requirements ever change.

Our index.js file now looks like this:

```javascript
// index.js: reads the file, converts its text and writes the result
const FileProcessor = require('./fileProcessor.js');
const TextProcessor = require('./textProcessor.js');

const readline = require('readline').createInterface({
  input: process.stdin,
  output: process.stdout
});

readline.question('Please specify the file to convert to HTML.', path => {
  const fileProcessor = new FileProcessor(path);
  const textProcessor = new TextProcessor();

  const inputText = fileProcessor.readAllText();
  const outputText = textProcessor.convertText(inputText);
  fileProcessor.writeToFile(outputText);

  readline.close();
});
```

We are now calling FileProcessor methods from within this file. But otherwise, the output will be exactly the same.

Now, one day, we are told that our application needs to be able to recognize Markdown (MD) emphasis markers in the text, which include bold, italic and strikethrough. These will be converted into their equivalent HTML markup.

So, in order to do this, all you have to do is add another class that inherits from TextProcessor. We’ll call it MdTextProcessor:

```javascript
// mdTextProcessor.js: adds Markdown emphasis support on top of TextProcessor
const TextProcessor = require('./textProcessor.js');

class MdTextProcessor extends TextProcessor {
  #tagsToReplace = null;

  constructor(tagsToReplace) {
    super();
    this.#tagsToReplace = tagsToReplace;
  }

  // Overrides TextProcessor.convertText
  convertText(inputText) {
    let processedText = super.convertText(inputText);

    for (const [marker, tags] of Object.entries(this.#tagsToReplace)) {
      // Only replace markers that come in complete opening/closing pairs
      if (this.countStringOccurrences(processedText, marker) % 2 === 0) {
        processedText = this.applyTagReplacement(processedText, marker, tags.opening, tags.closing);
      }
    }

    return processedText;
  }

  // Counts non-overlapping occurrences of pattern in text
  countStringOccurrences(text, pattern) {
    let count = 0;
    let currentIndex = 0;

    while ((currentIndex = text.indexOf(pattern, currentIndex)) !== -1) {
      currentIndex += pattern.length;
      count++;
    }

    return count;
  }

  // Replaces occurrences of inputTag alternately with openingTag and closingTag
  applyTagReplacement(text, inputTag, openingTag, closingTag) {
    let count = 0;
    let currentIndex = 0;

    while ((currentIndex = text.indexOf(inputTag, currentIndex)) !== -1) {
      count++;
      const replacement = count % 2 !== 0 ? openingTag : closingTag;
      // Replace at the found position and continue after the inserted tag
      text = text.slice(0, currentIndex) + replacement + text.slice(currentIndex + inputTag.length);
      currentIndex += replacement.length;
    }

    return text;
  }
}

module.exports = MdTextProcessor;
```

In its constructor, the class receives an object used as a dictionary: each key is a Markdown emphasis marker, and each value is an object containing two strings, the opening HTML tag and the closing HTML tag. The overridden `convertText` method calls the original `convertText` method of its superclass and then counts the occurrences of each emphasis marker in the result. If the count is even, it replaces the markers alternately with the opening and closing HTML tags; if it is odd, the content is not correctly formatted Markdown, so that marker is left as it is.
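To see the replacement logic in isolation, here is a condensed, standalone function that mirrors what `convertText` does after calling the superclass; the names `applyEmphasis` and `countOccurrences` are hypothetical. It shows the even-count rule, and what happens when `*` is processed before `**`.

```javascript
// Condensed, standalone version of MdTextProcessor's replacement logic.
function countOccurrences(text, marker) {
  let count = 0;
  let index = 0;
  while ((index = text.indexOf(marker, index)) !== -1) {
    index += marker.length;
    count++;
  }
  return count;
}

function applyEmphasis(text, tagsToReplace) {
  for (const [marker, tags] of Object.entries(tagsToReplace)) {
    if (countOccurrences(text, marker) % 2 !== 0) continue; // unmatched: leave as-is

    let count = 0;
    let index = 0;
    while ((index = text.indexOf(marker, index)) !== -1) {
      count++;
      const replacement = count % 2 !== 0 ? tags.opening : tags.closing;
      text = text.slice(0, index) + replacement + text.slice(index + marker.length);
      index += replacement.length;
    }
  }
  return text;
}

const bold = { opening: '<strong>', closing: '</strong>' };
const italic = { opening: '<em>', closing: '</em>' };

console.log(applyEmphasis('<p>**bold** and *italic*</p>', { '**': bold, '*': italic }));
// <p><strong>bold</strong> and <em>italic</em></p>
console.log(applyEmphasis('<p>**bold**</p>', { '*': italic, '**': bold }));
// <p><em></em>bold<em></em></p>  (wrong order: '*' consumes the '**' markers)
console.log(applyEmphasis('<p>2 * 3 = 6</p>', { '*': italic }));
// <p>2 * 3 = 6</p>  (odd count, so left untouched)
```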

Now, our index.js file will look like this:

```javascript
// index.js: now uses MdTextProcessor
const FileProcessor = require('./fileProcessor.js');
const MdTextProcessor = require('./mdTextProcessor.js');

const readline = require('readline').createInterface({
  input: process.stdin,
  output: process.stdout
});

// Markdown emphasis markers and their HTML equivalents.
// '**' must come before '*' so that bold markers are not consumed as italics.
const tagsToReplace = {
  '**': { opening: '<strong>', closing: '</strong>' },
  '*': { opening: '<em>', closing: '</em>' },
  '~~': { opening: '<del>', closing: '</del>' }
};

readline.question('Please specify the file to convert to HTML.', path => {
  const fileProcessor = new FileProcessor(path);
  const textProcessor = new MdTextProcessor(tagsToReplace);

  const inputText = fileProcessor.readAllText();
  const outputText = textProcessor.convertText(inputText);
  fileProcessor.writeToFile(outputText);

  readline.close();
});
```

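The dictionary is passed into `MdTextProcessor` from the outside, so we need to initialize it here. And our `textProcessor` variable now holds an instance of `MdTextProcessor` rather than `TextProcessor`. The rest of the code has remained unchanged. Note that the order of the entries matters: `**` must be listed before `*`, otherwise each `**` would be treated as two single-asterisk markers. JavaScript preserves the insertion order of non-integer string keys, so `Object.entries` returns the markers in the order in which they are declared.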

So, if we had any existing unit tests on the `convertText` method of the `TextProcessor` class, they would not be affected at all. Likewise, any external application that uses `TextProcessor` will keep working exactly as before after our code update. We have therefore added new capabilities without breaking any existing functionality.

This also illustrates another way of future-proofing our code. The requirements for which special text markers our application must recognize may change, so we have turned the markers into easily changeable data. As a result, `MdTextProcessor` won’t have to be altered when they do.

Although we could simply use the opening HTML tag as the value in the dictionary and then generate the closing tag on the fly by inserting a slash character into it, we have defined the opening and closing tags explicitly. Again, what if the requirements state that we need to add attributes to the opening HTML tags? What if certain markers need to correspond to nested tags? It would be difficult to foresee all possible scenarios beforehand, so the simplest thing we can do is make both tags explicit, which covers any such scenario.
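The sketch below shows why deriving the closing tag automatically is fragile. `naiveClosingTag` is a hypothetical helper that does exactly what the paragraph above describes (insert a slash after `<`): it works for a plain tag, but not for a tag with attributes or for nested tags. The `'***'` and `'=='` entries are illustrative assumptions, not markers the chapter’s application supports.

```javascript
// Hypothetical helper: derive a closing tag by inserting '/' after the first '<'.
function naiveClosingTag(openingTag) {
  return openingTag.replace('<', '</');
}

console.log(naiveClosingTag('<del>'));               // </del> (fine)
console.log(naiveClosingTag('<mark class="note">')); // </mark class="note"> (invalid HTML)
console.log(naiveClosingTag('<strong><em>'));        // </strong><em> (wrong for nested tags)

// With explicit pairs, each of these cases is just data.
const tagsToReplace = {
  '***': { opening: '<strong><em>', closing: '</em></strong>' },
  '==': { opening: '<mark class="note">', closing: '</mark>' },
  '~~': { opening: '<del>', closing: '</del>' }
};

for (const [marker, tags] of Object.entries(tagsToReplace)) {
  console.log(`${marker} -> ${tags.opening}...${tags.closing}`);
}
```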

The benefits of adhering to open-closed principle

The open-closed principle is useful to know, as it can substantially reduce the impact of changes to your application code. If your software is designed with this principle in mind, future modifications to any one of your components will not require you to modify other components or to assess the impact on external applications.

However, unlike the single responsibility principle, which should be followed almost like a law, there are situations where applying the open-closed principle has more cons than pros. For example, when designing a component, you will have to think of any potential changes to the requirements in the future. This, sometimes, is counterproductive, especially when your code is quite likely to be radically restructured at some point.

So, while you still need to spend some time thinking about what new functionality may be added to your new class in the future, use common sense. Considering the most obvious changes is often sufficient.

Also, although adding new public methods to an existing class without modifying its existing methods would, strictly speaking, violate the open-closed principle, it will not cause the most common problems that the principle is designed to address. So, in most cases, it is completely fine to do this instead of creating even more classes that expand your inheritance hierarchy.

However, in this case, if your code is intended to be used by external software, always make sure that you increment the version of your library. If you don’t, external software that relies on the new methods could break if it accidentally receives the old version of the library with the same version number. That said, every software development team needs a versioning strategy, and most of them have one, so this problem should be extremely rare.

3. Liskov substitution principle

The Liskov substitution principle was introduced by Barbara Liskov, an American computer scientist, in 1987. The principle states that if you substitute a base class (superclass) with any of its derived classes, the behavior of the program should not change.

This principle was introduced specifically with inheritance in mind, which is an integral feature of object oriented programming. Inheritance allows you to extend the functionality of classes or modules (depending on what programming language you use). So, if you need a class with some new functionality that is closely related to what you already have in a different class, you can just inherit from the existing class instead of creating a completely new one.

When inheritance is applied, any object-oriented programming language will allow you to put an object whose type is the derived class into a variable or parameter that expects an object of the base class. For example, if you had a superclass called `Car`, you could create another class called `SportsCar` that inherits from it. In this case, an instance of `SportsCar` is also a `Car`, just as it would be in real life. Therefore, a variable or parameter of type `Car` could be set to an instance of `SportsCar`.

And this is where a potential problem arises. There may be a method or a property inside the original `Car` class that has some specific behavior, and other places in the code may have been written to expect that behavior from `Car` instances. However, inheritance allows those behaviors to be completely overridden.

If the derived class overrides some of the properties and methods of the base class and modifies their behavior, then passing an instance of the derived class into the places that expect the base class may cause unintended consequences. And this is exactly the problem that the Liskov substitution principle was designed to address.
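Here is a minimal sketch of the `Car` and `SportsCar` scenario. The contract of `accelerate` (increase the speed by exactly `delta`, up to the car’s maximum speed) and the `TunedSportsCar` class are hypothetical. `SportsCar` only changes a value that the inherited method reads, so client code written against `Car` keeps working; `TunedSportsCar` overrides the method itself and breaks that code.

```javascript
class Car {
  constructor() {
    this.speed = 0;
  }

  get maxSpeed() {
    return 180;
  }

  // Contract: increases speed by exactly `delta`, never above maxSpeed.
  accelerate(delta) {
    this.speed = Math.min(this.speed + delta, this.maxSpeed);
    return this.speed;
  }
}

// Substitutable: only the limit changes; accelerate() keeps its contract.
class SportsCar extends Car {
  get maxSpeed() {
    return 300;
  }
}

// Violates the principle: callers expecting +delta get something else.
class TunedSportsCar extends Car {
  accelerate(delta) {
    this.speed += delta * 2; // ignores both the contract and the limit
    return this.speed;
  }
}

// Client code written against Car's documented behavior.
function acceleratesBy50(car) {
  const before = car.speed;
  car.accelerate(50);
  return car.speed - before === 50;
}

console.log(acceleratesBy50(new Car()));            // true
console.log(acceleratesBy50(new SportsCar()));      // true
console.log(acceleratesBy50(new TunedSportsCar())); // false
```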

Implementing the Liskov substitution principle in JavaScript

The application we have developed so far adheres to the open-closed principle quite well, but it violates the Liskov substitution principle. Although the overridden `convertText` method calls the original method of the superclass, so calling it on the derived class will still process the paragraphs, it also implements some additional logic, which may produce completely unexpected results. I will demonstrate this via a unit test.

So, I have written a test to validate the basic functionality of the original `convertText` method. In the example below, I have used the Jest testing framework, which can be installed by running the `npm install --save-dev jest` command inside your project folder. I have then set the `test` script in the `scripts` section of the `package.json` file to `jest`, so that `npm test` runs the tests.

I have then created a `__tests__` folder in the root folder of the project and placed a `test.textProcessor.js` file inside it (by default, Jest treats every `.js` file inside a `__tests__` folder as a test file). The content of this file is as follows. We create an instance of `TextProcessor`, pass it some arbitrary input text and then check whether the expected output text has been produced.

```javascript
// __tests__/test.textProcessor.js
const TextProcessor = require('../textProcessor.js');

test('Test TextProcessor.convertText', () => {
  const originalText = 'This is the first paragraph. It has * and *.\r\n' +
    'This is the second paragraph. It has ** and **.';

  const expectedString = '<p>This is the first paragraph. It has * and *.</p>\n' +
    '<p>This is the second paragraph. It has ** and **.</p>\n' +
    '<br/>';

  const textProcessor = new TextProcessor();

  expect(textProcessor.convertText(originalText)).toBe(expectedString);
});
```

Please note that we have deliberately inserted some symbols into the input text that have a special meaning in the Markdown format. However, `TextProcessor` on its own knows nothing about Markdown, so those symbols are expected to be ignored. The test will therefore pass.

Because `MdTextProcessor` extends `TextProcessor`, an instance of it can be used anywhere a `TextProcessor` is expected. So, without changing the input text or the expected output in any way, we can construct an `MdTextProcessor` and assign it to the same `textProcessor` variable:

```javascript
// __tests__/test.textProcessor.js, now constructing an MdTextProcessor
const MdTextProcessor = require('../mdTextProcessor.js');

test('Test TextProcessor.convertText', () => {
  const originalText = 'This is the first paragraph. It has * and *.\r\n' +
    'This is the second paragraph. It has ** and **.';

  const expectedString = '<p>This is the first paragraph. It has * and *.</p>\n' +
    '<p>This is the second paragraph. It has ** and **.</p>\n' +
    '<br/>';

  const tagsToReplace = {
    '**': { opening: '<strong>', closing: '</strong>' },
    '*': { opening: '<em>', closing: '</em>' },
    '~~': { opening: '<del>', closing: '</del>' }
  };

  const textProcessor = new MdTextProcessor(tagsToReplace);

  // Fails while MdTextProcessor overrides convertText: the markers become HTML tags
  expect(textProcessor.convertText(originalText)).toBe(expectedString);
});
```

The test will now fail. The output from the convertText method will change, as those Markdown symbols will be converted to HTML tags. And this is exactly how other places in your code may end up behaving differently from how they were intended to behave.

However, there is a very easy way of addressing this issue. If we go back to our `MdTextProcessor` class and turn the `convertText` override into a new method, which I called `convertMdText`, without changing any of its content, our test will pass once again.

```javascript
// mdTextProcessor.js: Markdown support added as a new method, not an override
const TextProcessor = require('./textProcessor.js');

class MdTextProcessor extends TextProcessor {
  #tagsToReplace = null;

  constructor(tagsToReplace) {
    super();
    this.#tagsToReplace = tagsToReplace;
  }

  // New method: the inherited convertText behaves exactly as in TextProcessor
  convertMdText(inputText) {
    let processedText = super.convertText(inputText);

    for (const [marker, tags] of Object.entries(this.#tagsToReplace)) {
      // Only replace markers that come in complete opening/closing pairs
      if (this.countStringOccurrences(processedText, marker) % 2 === 0) {
        processedText = this.applyTagReplacement(processedText, marker, tags.opening, tags.closing);
      }
    }

    return processedText;
  }

  // Counts non-overlapping occurrences of pattern in text
  countStringOccurrences(text, pattern) {
    let count = 0;
    let currentIndex = 0;

    while ((currentIndex = text.indexOf(pattern, currentIndex)) !== -1) {
      currentIndex += pattern.length;
      count++;
    }

    return count;
  }

  // Replaces occurrences of inputTag alternately with openingTag and closingTag
  applyTagReplacement(text, inputTag, openingTag, closingTag) {
    let count = 0;
    let currentIndex = 0;

    while ((currentIndex = text.indexOf(inputTag, currentIndex)) !== -1) {
      count++;
      const replacement = count % 2 !== 0 ? openingTag : closingTag;
      // Replace at the found position and continue after the inserted tag
      text = text.slice(0, currentIndex) + replacement + text.slice(currentIndex + inputTag.length);
      currentIndex += replacement.length;
    }

    return text;
  }
}

module.exports = MdTextProcessor;
```

Our code is still structured in accordance with the single responsibility principle, as the method is purely responsible for converting text and nothing else. And the inheritance is still justified, as the new method fully relies on the existing functionality of the superclass.

We are still acting in accordance with the open-closed principle, but we no longer violate the Liskov substitution principle. Every instance of the derived class inherits the superclass functionality, and all of that existing functionality works exactly as it did in the superclass. So, using objects created from derived classes will not break any existing functionality that relies on the superclass. Code that needs the Markdown conversion, such as our `index.js` file, now calls `convertMdText` instead of `convertText`.
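One way to check substitutability mechanically is to run the same inputs through a base-class instance and a derived-class instance and compare the results of the inherited method. The sketch below does this with a hypothetical `behavesLikeBase` helper and trimmed-down stand-ins for the chapter’s classes; the Markdown logic is reduced to a single regular expression for italics, purely for brevity.

```javascript
// Trimmed-down stand-ins for the chapter's classes.
class TextProcessor {
  convertText(inputText) {
    const paragraphs = inputText.split(/\r?\n/).filter(p => p);
    return paragraphs.map(p => `<p>${p}</p>\n`).join('') + '<br/>';
  }
}

// First version: overrides convertText and changes its output.
class OverridingMdTextProcessor extends TextProcessor {
  convertText(inputText) {
    return super.convertText(inputText).replace(/\*(.+?)\*/g, '<em>$1</em>');
  }
}

// Second version: adds convertMdText and leaves convertText alone.
class MdTextProcessor extends TextProcessor {
  convertMdText(inputText) {
    return super.convertText(inputText).replace(/\*(.+?)\*/g, '<em>$1</em>');
  }
}

// Hypothetical helper: does `candidate` give the same convertText output as the base?
function behavesLikeBase(candidate, inputs) {
  const base = new TextProcessor();
  return inputs.every(input => candidate.convertText(input) === base.convertText(input));
}

const inputs = ['Plain paragraph.', 'It has * and *.\r\nSecond paragraph.'];

console.log(behavesLikeBase(new OverridingMdTextProcessor(), inputs)); // false
console.log(behavesLikeBase(new MdTextProcessor(), inputs));           // true
console.log(new MdTextProcessor().convertMdText('Some *emphasis*'));
// <p>Some <em>emphasis</em></p>
// <br/>
```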

Summary of Liskov substitution principle

The Liskov substitution principle is a coding principle that helps prevent unintended behavior from being introduced into the code when you extend existing classes via inheritance.

However, certain language features may give less experienced developers the impression that it’s OK to write code that violates this principle. For example, the virtual keyword in languages such as C# and C++ may even seem to encourage people to ignore it. And sure enough, when an abstract method is overridden, nothing will break, as the original method didn’t have any implementation. But a virtual method already has some logic inside it, so overriding it will change its behavior and will probably violate the Liskov substitution principle.

The important thing to note, however, is that a principle is not the same as a law. While a law is something that should always be applied, a principle should be applied in the majority of cases. And sometimes there are situations where violating a certain principle makes sense.

Also, overriding virtual methods won’t necessarily violate the Liskov substitution principle. The principle is only violated if the observable behavior of the overridden method changes. If, for example, the override still produces the same output and merely updates class variables that are only used by methods specific to the derived class, the Liskov substitution principle is not violated.
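Here is a minimal sketch of such a harmless override, again using hypothetical, simplified classes. `CountingProcessor` overrides `convertText` only to update a private counter that is read by `getConversionCount`, a method that exists solely on the derived class; the value returned by `convertText` is untouched.

```javascript
// Simplified, hypothetical stand-in for the super class.
class BaseProcessor {
  convertText(inputText) {
    return `<p>${inputText}</p>`;
  }
}

// Overrides convertText, but only to record extra state used by a
// method that exists solely on the derived class. The return value is
// unchanged, so callers of the base class cannot tell the difference.
class CountingProcessor extends BaseProcessor {
  #conversions = 0;

  convertText(inputText) {
    this.#conversions++;
    return super.convertText(inputText);
  }

  // Only relevant to the derived class.
  getConversionCount() {
    return this.#conversions;
  }
}

const base = new BaseProcessor();
const counting = new CountingProcessor();
console.log(base.convertText('hello') === counting.convertText('hello')); // true
console.log(counting.getConversionCount()); // 1
```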

So, whenever you need to decide whether or not to override a virtual method, use common sense. If you are confident that none of the components that rely on the super class functionality will be broken, then go ahead and override the method, especially if it seems to be the most convenient thing to do. But try to apply the Liskov substitution principle as much as you can.

4. Interface segregation principle

In object-oriented programming, interfaces are used to define signatures of methods and properties without specifying the exact logic inside of them. Essentially, they act as a contract that a class must adhere to. If a class implements any particular interface, it must contain all members defined by the interface as its public members.

The interface segregation principle states that if any particular interface member is not intended to be implemented by every class that implements the interface, it must not be in the interface. It is closely related to the single responsibility principle, as it makes sure that only the absolutely essential functionality is covered by the interface and by the classes that implement it.

And now, to demonstrate the interface segregation principle, we will need to add some interfaces to our project and get our classes to implement them. Unfortunately, JavaScript, whose object model is built on prototypes rather than on classical class hierarchies, doesn’t currently have the concept of interfaces. However, it’s still possible to add interface-like functionality to a JavaScript codebase, and it can still have its benefits.

Quick recap on interfaces

For those who are new to object-oriented programming in JavaScript, an `interface` represents a structure that defines all externally accessible members of a class (fields, properties, methods, etc.). But, unlike a `class`, an interface doesn’t have any functionality inside any of these member definitions. So, an interface represents the signature of an object, but not a usable object itself.

Interfaces are used to create a family of classes with the same external surface area, but different internal behavior. You can think of it as a contract that a specific class needs to adhere to. A class can then “implement” an interface. And when it does, then all members that have been defined on the interface need to be present in the class with exactly the same signatures (same parameters, same return data types, etc.).

Interfaces are very useful, especially in strongly-typed languages, like Java, C#, C++, etc. You can use an interface as the data type of a parameter and then pass in an instance of absolutely any class that implements this interface. This allows you to choose whichever implementation suits the context, or to create a fake or mock implementation of an external dependency for the purpose of writing unit tests.

Adding interfaces to our project

Even though JavaScript doesn’t natively support the concept of interfaces, there are still ways to use them in the language. The methods of using them are as follows:

- Use a strongly-typed object-oriented superset of JavaScript, like Flow or TypeScript, which have native support for interfaces.

- Use duck typing.

- Use various NPM libraries to enable interface-like functionality.

- Manually add interface-like behavior.

In the example below, we will apply option 4, as this requires the fewest modifications to our existing code. But in real-life projects, I would recommend using option 1 right from the start.
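Although the rest of this section uses option 4, a minimal sketch of option 2 may help clarify the alternative. With duck typing, code checks whether an object has the required methods instead of checking which class it was created from. The `conformsTo` helper and the `textProcessorShape` list are hypothetical names used for illustration; they are not part of the project or of any library.

```javascript
// Hypothetical helper: returns true if `candidate` has a callable
// member for every method name listed in `methodNames`.
function conformsTo(candidate, methodNames) {
  return candidate != null &&
    methodNames.every((name) => typeof candidate[name] === 'function');
}

// The "interface" is just a list of required method names.
const textProcessorShape = ['convertText'];

// Any object with the right methods qualifies, whatever its class.
const plainObject = { convertText: (text) => `<p>${text}</p>` };

class SomeProcessor {
  convertText(text) {
    return text;
  }
}

console.log(conformsTo(plainObject, textProcessorShape));         // true
console.log(conformsTo(new SomeProcessor(), textProcessorShape)); // true
console.log(conformsTo({}, textProcessorShape));                  // false
```

The trade-off is that nothing is enforced until the check runs, and the check only looks at method names, not at parameters or return values.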

As our MdTextProcessor has two methods, convertText, which is inherited from TextProcessor, and convertMdText, which is its own, we can create the following class, which has the signatures of both of these methods but forces us to override each of them.

class TextProcessorInterface {

convertText(inputText) {

throw new Error('This method needs to be overridden.');

}

convertMdText(inputText) {

throw new Error('This method needs to be overridden.');

}

}

module.exports = TextProcessorInterface;

This, essentially, would be a representation of an interface if we were to rely only on the native language features of JavaScript. It provides signatures for the methods. But if you try to call any of these methods directly, an exception would be thrown.
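One possible refinement, sketched here under the assumption that failing early is preferable to failing on the first call, is to have the base class verify during construction that a subclass overrides every required method. `StrictTextProcessorInterface`, `CompleteProcessor` and `IncompleteProcessor` are hypothetical names; the check relies on `new.target` and on comparing the instance's methods with the placeholders on the base class's prototype.

```javascript
// Hypothetical, stricter take on the "interface as a base class" idea.
class StrictTextProcessorInterface {
  constructor() {
    // Refuse direct instantiation of the "interface" itself.
    if (new.target === StrictTextProcessorInterface) {
      throw new TypeError('StrictTextProcessorInterface cannot be instantiated directly.');
    }
    // A method is "not implemented" if the instance still resolves it
    // to the placeholder defined on this class.
    for (const name of ['convertText', 'convertMdText']) {
      if (this[name] === StrictTextProcessorInterface.prototype[name]) {
        throw new TypeError(`${new.target.name} must override ${name}().`);
      }
    }
  }

  convertText(inputText) {
    throw new Error('This method needs to be overridden.');
  }

  convertMdText(inputText) {
    throw new Error('This method needs to be overridden.');
  }
}

class CompleteProcessor extends StrictTextProcessorInterface {
  convertText(inputText) { return `<p>${inputText}</p>`; }
  convertMdText(inputText) { return this.convertText(inputText); }
}

class IncompleteProcessor extends StrictTextProcessorInterface {
  convertText(inputText) { return `<p>${inputText}</p>`; }
}

console.log(new CompleteProcessor().convertMdText('hi')); // <p>hi</p>
try {
  new IncompleteProcessor();
} catch (err) {
  console.log(err.message); // IncompleteProcessor must override convertMdText().
}
```

Note that IncompleteProcessor is in the same position as a plain text processor that has no reason to know about Markdown: with a strict check it cannot even be created.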

We can then implement this “interface” by extending the class that represents it. So, our textProcessor.js file will now look like this:

const TextProcessorInterface = require('./textProcessorInterface.js');

class TextProcessor extends TextProcessorInterface {

convertText(inputText) {

// Split on Unix (\n) or Windows (\r\n) line endings.

var paragraphs = inputText.split(/\r?\n/);

var textToWrite = '';

for (var i = 0; i < paragraphs.length; i++) {

if (paragraphs[i])

textToWrite += `<p>${paragraphs[i]}</p>\n`;

}

textToWrite += '<br/>';

return textToWrite;

}

}

module.exports = TextProcessor;

And, since we then extend TextProcessor class when we declare MdTextProcessor, we will end up overriding both of the methods of TextProcessorInterface. So far so good. However, there is a problem.

Our original TextProcessor class now has two methods. Because it extends TextProcessorInterface, it implicitly inherits the convertMdText method from it. And even though the method throws an error when called, it can still be called by any code that uses the original, unextended version of TextProcessor.
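The leak is easy to observe. The sketch below uses shortened, hypothetical copies of the two classes (the real convertText is replaced by a one-line version) to show that a plain TextProcessor exposes convertMdText, and that the problem only surfaces when that method is actually called.

```javascript
// Shortened copies of the classes above, for illustration only.
class TextProcessorInterface {
  convertText(inputText) {
    throw new Error('This method needs to be overridden.');
  }

  convertMdText(inputText) {
    throw new Error('This method needs to be overridden.');
  }
}

class TextProcessor extends TextProcessorInterface {
  convertText(inputText) {
    return `<p>${inputText}</p>`;
  }
}

const processor = new TextProcessor();
// The Markdown-specific method leaks into the plain text processor...
console.log(typeof processor.convertMdText); // function
// ...and fails only when someone calls it.
try {
  processor.convertMdText('*hi*');
} catch (err) {
  console.log(err.message); // This method needs to be overridden.
}
```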

But TextProcessor is not meant to know anything about convertMdText method. This method is specific to MdTextProcessor. Therefore this method doesn’t belong in TextProcessor.

To emphasize it further, imagine that you have multiple classes that extend TextProcessor. And each one of them has its own set of additional methods. You don’t know ahead of time what other implementations will exist. And it doesn’t make sense to put all of these additional methods from all of these implementations into the same interface.

And that’s where the interface segregation principle comes into play. To adhere to it, all you have to do is make sure that the interface only defines the members that every class directly implementing it will actually implement. So, if we adhere to this principle, our TextProcessorInterface definition will turn into this:

class TextProcessorInterface {

convertText(inputText) {

throw new Error('This method needs to be overridden.');

}

}

module.exports = TextProcessorInterface;

And that’s actually enough to make our current solution adhere to the interface segregation principle. Because JavaScript is a dynamically-typed language, you don’t really have to create additional interfaces for any classes that extend your original class. To make the separation cleaner, you could, of course, move the additional methods of any derived classes into mixins, but this would be overkill in our case.
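For completeness, here is a minimal sketch of the mixin approach, assuming the common JavaScript idiom of a function that takes a base class and returns a subclass. `MdConversionMixin` and its toy `*...*` conversion are hypothetical and much simpler than the project's real MdTextProcessor; the point is that only classes that opt in receive convertMdText, while the interface stays minimal.

```javascript
// Simplified stand-ins for the project's classes.
class TextProcessorInterface {
  convertText(inputText) {
    throw new Error('This method needs to be overridden.');
  }
}

class TextProcessor extends TextProcessorInterface {
  convertText(inputText) {
    return `<p>${inputText}</p>`;
  }
}

// Hypothetical mixin: takes a base class and returns a subclass that
// adds the Markdown-specific method.
const MdConversionMixin = (Base) => class extends Base {
  convertMdText(inputText) {
    // Toy conversion for illustration: wraps the first *...* pair in <em>.
    return this.convertText(inputText.replace('*', '<em>').replace('*', '</em>'));
  }
};

class MdTextProcessor extends MdConversionMixin(TextProcessor) {}

const md = new MdTextProcessor();
console.log(md.convertMdText('a *b* c'));            // <p>a <em>b</em> c</p>
console.log(md instanceof TextProcessor);            // true
console.log('convertMdText' in new TextProcessor()); // false
```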

However, if you are using a strongly-typed superset of JavaScript, such as TypeScript, it is worth getting your derived classes to implement extended interfaces. In this case, you can either extend your original interface and get your derived class to implement it, or have two separate interfaces and get your derived class to implement them both.

For example, if you were to use TypeScript, your TextProcessorInterface would look like this:

interface TextProcessorInterface {

convertText(inputText: string): string;

}

The signature of the TextProcessor class would then look like this:

class TextProcessor implements TextProcessorInterface

Then, you may have the following interface definition that would then be implemented by MdTextProcessor:

interface MdTextProcessorInterface extends TextProcessorInterface {

convertMdText(inputText: string): string;

}

Which you can implement as follows:

class MdTextProcessor extends TextProcessor implements MdTextProcessorInterface

So, this way, MdTextProcessor class will adhere to TextProcessorInterface and you will be able to use it in any place that expects a parameter of TextProcessorInterface type. But the class also implements some additional functionality, which was defined by an additional interface.

And that concludes our overview of adherence to interface segregation principle in JavaScript. Let’s summarize it.

Summary of interface segregation principle

The interface segregation principle is very similar to the single responsibility principle, but it applies to interfaces rather than concrete classes. An interface is nothing more than a contract that defines the structure of your class. And the interface segregation principle states that the only things you should define in your contract are the things that you intend to implement in your actual class. For anything else, you need a separate contract.

The benefits of using interface segregation principle are the same as those of using single responsibility principle. Your code will only contain what is needed. So your code will be simpler and easier to maintain.

And now we will move on to the final SOLID principle, which is the dependency inversion principle.

5. Dependency inversion principle

Dependency inversion principle states that a higher-level object should never depend on a concrete implementation of a lower-level object. Both should depend on abstractions. But what does it actually mean, you may ask?

Most object-oriented languages have a way of specifying a contract to which any concrete class or module should adhere. Usually, this is known as an interface.

An interface defines the signature of all the public members that a class must have, but, unlike a class, an interface doesn’t have any logic inside of those members. Traditionally, it doesn’t even allow you to define a method body to put the logic in.

But as well as being a contract that defines the accessible surface area of a class, an interface can be used as a data type in variables and parameters. When used in such a way, it can accept an instance of absolutely any class that implements the interface.

And this is where dependency inversion comes from. Instead of making your methods and constructors accept a concrete class, you make them accept the interface that the class in question implements.

The class that accepts an interface as its dependency is a higher-level class than the dependency. You pass an interface because your higher-level class doesn’t really care what logic is executed inside its dependency when a given method is called on it. All it cares about is that a method with a specific name and signature exists on the dependency.

Why dependency inversion principle is important

Currently, we have the index.js file that coordinates the entire logic:

const FileProcessor = require('./fileProcessor.js');

const MdTextProcessor = require('./mdTextProcessor.js');

const readline = require('readline').createInterface({

input: process.stdin,

output: process.stdout

});

const tagsToReplace = {

'**': {

opening: '<strong>',

closing: '</strong>'

},

'*': {

opening: '<em>',

closing: '</em>'

},

'~~': {

opening: '<del>',

closing: '</del>'

}

};

readline.question('Please specify the file to convert to HTML.', path => {

var fileProcessor = new FileProcessor(path);

var textProcessor = new MdTextProcessor(tagsToReplace);

var inputText = fileProcessor.readAllText();

var outputText = textProcessor.convertMdText(inputText);

fileProcessor.writeToFile(outputText);

readline.close();

});

Having all the logic inside the index.js file is probably not the best way of doing things. It’s meant to be purely an entry point for the application. Since it’s a console application, reading input from the console and writing output to it is also acceptable. However, having it coordinate the text conversion logic between separate classes is probably not something we want.

So, we have moved our logic into a separate class that coordinates the text conversion process and we called it TextConversionCoordinator. We have placed it into textConversionCoordinator.js file, the content of which has been defined as follows:

const FileProcessor = require('./fileProcessor.js');

const MdTextProcessor = require('./mdTextProcessor.js');

class TextConversionCoordinator {

#textProcessor = null;

#fileProcessor = null;

convertText() {

var status = {

textExtractedFromFile: false,

textConverted: false,

outputFileSaved: false,

error: null

}

var inputText;

try {

inputText = this.#fileProcessor.readAllText();

status.textExtractedFromFile = true;

}

catch (err) {

status.error = err;

return status;

}

var outputText;

try {

outputText = this.#textProcessor.convertMdText(inputText);

if (outputText != inputText)

status.textConverted = true;

}

catch (err) {

status.error = err;

return status;

}

try {

this.#fileProcessor.writeToFile(outputText);

status.outputFileSaved = true;

}

catch (err) {

status.error = err;

return status;

}

return status;

}

constructor(fullFilePath, tagsToReplace) {

this.#fileProcessor = new FileProcessor(fullFilePath);

this.#textProcessor = new MdTextProcessor(tagsToReplace);

}

}

module.exports = TextConversionCoordinator;

And our index.js file becomes this:

const TextConversionCoordinator = require('./textConversionCoordinator.js');

const readline = require('readline').createInterface({

input: process.stdin,

output: process.stdout

});

const tagsToReplace = {

'**': {

opening: '<strong>',

closing: '</strong>'

},

'*': {

opening: '<em>',

closing: '</em>'

},

'~~': {

opening: '<del>',

closing: '</del>'

}

};

readline.question('Please specify the file to convert to HTML.', path => {

var coordinator = new TextConversionCoordinator(path, tagsToReplace);

var status = coordinator.convertText();

console.log(`Text extracted from file: ${status.textExtractedFromFile}`);

console.log(`Text converted: ${status.textConverted}`);

console.log(`Output file saved: ${status.outputFileSaved}`);

if (status.error)

console.log(`The following error occurred: ${status.error}`);

readline.close();

});

Currently, this will work, but it’s almost impossible to write unit tests against it, because a test wouldn’t exercise the method in isolation. TextConversionCoordinator, in its current implementation, instantiates concrete implementations of FileProcessor and MdTextProcessor. So, if you run the convertText method, you will run your entire application logic.

And you depend on a specific file actually being present in a specific location. Otherwise, you won’t be able to emulate the successful scenario reliably. The folder structure will differ between the environments where your tests run, and you don’t want to ship test files with your repository and run environment-specific setup and tear-down scripts. And remember that the output files need to be managed too.

Luckily, your TextConversionCoordinator class doesn’t care where the file comes from. All it cares about is that FileProcessor returns some text that can be then passed to MdTextProcessor. And it doesn’t care how exactly MdTextProcessor does its conversion. All it cares about is that the conversion has happened, so it can set the right values in the status object it’s about to return.

Whenever you are unit-testing a method, there are two things you are primarily concerned about:

- Whether the method produces expected outputs based on known inputs.

- Whether all the expected methods on the dependencies were called.

And both of these can be easily established if, instead of instantiating concrete classes inside our class, we accept abstractions of those as the class constructor parameters.

So, we modify the implementation of the TextConversionCoordinator class as follows:

class TextConversionCoordinator {

#textProcessor = null;

#fileProcessor = null;

convertText() {

var status = {

textExtractedFromFile: false,

textConverted: false,

outputFileSaved: false,

error: null

}

var inputText;

try {

inputText = this.#fileProcessor.readAllText();

status.textExtractedFromFile = true;

}

catch (err) {

status.error = err;

return status;

}

var outputText;

try {

outputText = this.#textProcessor.convertMdText(inputText);

if (outputText != inputText)

status.textConverted = true;

}

catch (err) {

status.error = err;

return status;

}

try {

this.#fileProcessor.writeToFile(outputText);

status.outputFileSaved = true;

}

catch (err) {

status.error = err;

return status;

}

return status;

}

constructor(fileProcessor, textProcessor) {

this.#fileProcessor = fileProcessor;

this.#textProcessor = textProcessor;

}

}

module.exports = TextConversionCoordinator;

And the index.js file will now look as follows:

const FileProcessor = require('./fileProcessor.js');

const MdTextProcessor = require('./mdTextProcessor.js');

const TextConversionCoordinator = require('./textConversionCoordinator.js');

const readline = require('readline').createInterface({

input: process.stdin,

output: process.stdout

});

const tagsToReplace = {

'**': {

opening: '<strong>',

closing: '</strong>'

},

'*': {

opening: '<em>',

closing: '</em>'

},

'~~': {

opening: '<del>',

closing: '</del>'

}

};

readline.question('Please specify the file to convert to HTML.', path => {

var fileProcessor = new FileProcessor(path);

var textProcessor = new MdTextProcessor(tagsToReplace);

var coordinator = new TextConversionCoordinator(fileProcessor, textProcessor);

var status = coordinator.convertText();

console.log(`Text extracted from file: ${status.textExtractedFromFile}`);

console.log(`Text converted: ${status.textConverted}`);

console.log(`Output file saved: ${status.outputFileSaved}`);

if (status.error)

console.log(`The following error occurred: ${status.error}`);

readline.close();

});

If you now run the program, it will work in exactly the same way as it did before. However, now we can write unit tests for it very easily.

The dependencies can now be replaced with mock objects whose methods return predetermined values. This enables us to test the logic of the method in isolation from any other components:

const TextConversionCoordinator = require('../textConversionCoordinator.js')

test('Test TextConversionCoordinator.convertText can process text', () => {

var fileProcessor = {

readAllText() {

return 'input';

},

writeToFile() {

return;

}

};

var textProcessor = {

convertMdText(input) {

return 'altered input';

}

}

var status = new TextConversionCoordinator(fileProcessor, textProcessor).convertText();

expect(status.textExtractedFromFile).toBe(true);

expect(status.textConverted).toBe(true);

expect(status.outputFileSaved).toBe(true);

expect(status.error).toBeNull();

})

test('Test TextConversionCoordinator.convertText can detect unconverted text', () => {

var fileProcessor = {

readAllText() {

return 'input';

},

writeToFile() {

return;

}

};

var textProcessor = {

convertMdText(input) {

return 'input';

}

}

var status = new TextConversionCoordinator(fileProcessor, textProcessor).convertText();

expect(status.textExtractedFromFile).toBe(true);

expect(status.textConverted).toBe(false);

expect(status.outputFileSaved).toBe(true);

expect(status.error).toBeNull();

})

test('Test TextConversionCoordinator.convertText can detect unsuccessful read', () => {

var fileProcessor = {

readAllText() {

throw 'Read error occurred.';

},

writeToFile() {

return;

}

};

var textProcessor = {

convertMdText(input) {

return 'input';

}

}

var status = new TextConversionCoordinator(fileProcessor, textProcessor).convertText();

expect(status.textExtractedFromFile).toBe(false);

expect(status.textConverted).toBe(false);

expect(status.outputFileSaved).toBe(false);

expect(status.error).toBe('Read error occurred.');

})

test('Test TextConversionCoordinator.convertText can detect unsuccessful convert', () => {

var fileProcessor = {

readAllText() {

return 'input';

},

writeToFile() {

return;

}

};

var textProcessor = {

convertMdText(input) {

throw 'Conversion error occurred.';

}

}

var status = new TextConversionCoordinator(fileProcessor, textProcessor).convertText();

expect(status.textExtractedFromFile).toBe(true);

expect(status.textConverted).toBe(false);

expect(status.outputFileSaved).toBe(false);

expect(status.error).toBe('Conversion error occurred.');

})

test('Test TextConversionCoordinator.convertText can detect unsuccessful save', () => {

var fileProcessor = {

readAllText() {

return 'input';

},

writeToFile() {

throw 'Unable to save the file.';

}

};

var textProcessor = {

convertMdText(input) {

return 'altered input';

}

}

var status = new TextConversionCoordinator(fileProcessor, textProcessor).convertText();

expect(status.textExtractedFromFile).toBe(true);

expect(status.textConverted).toBe(true);

expect(status.outputFileSaved).toBe(false);

expect(status.error).toBe('Unable to save the file.');

})

What we are doing here is checking whether the outputs of the convertText method are what we expect them to be based on various outputs from the dependencies that the method calls internally. And all of those dependencies are just mocked to provide us with consistent scenario-specific outputs.
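These tests cover the first of the two concerns listed earlier, the outputs. The second, whether the expected methods on the dependencies were called, can be checked with spies. In Jest you would typically use `jest.fn()` together with `expect(...).toHaveBeenCalledWith(...)`; the sketch below instead hand-rolls a tiny, hypothetical `spy` helper so that it runs without any test framework, and includes a condensed copy of TextConversionCoordinator so that it is self-contained.

```javascript
// Condensed copy of TextConversionCoordinator, included only so this
// snippet runs on its own. It behaves like the version shown above.
class TextConversionCoordinator {
  #fileProcessor;
  #textProcessor;

  constructor(fileProcessor, textProcessor) {
    this.#fileProcessor = fileProcessor;
    this.#textProcessor = textProcessor;
  }

  convertText() {
    const status = {
      textExtractedFromFile: false,
      textConverted: false,
      outputFileSaved: false,
      error: null,
    };
    try {
      const inputText = this.#fileProcessor.readAllText();
      status.textExtractedFromFile = true;
      const outputText = this.#textProcessor.convertMdText(inputText);
      if (outputText !== inputText) status.textConverted = true;
      this.#fileProcessor.writeToFile(outputText);
      status.outputFileSaved = true;
    } catch (err) {
      // The first failing step stops the process and is reported.
      status.error = err;
    }
    return status;
  }
}

// Hypothetical hand-rolled spy: wraps a function and records the
// arguments of every call so a test can inspect them afterwards.
function spy(implementation = () => undefined) {
  const calls = [];
  const wrapper = (...args) => {
    calls.push(args);
    return implementation(...args);
  };
  wrapper.calls = calls;
  return wrapper;
}

const fileProcessor = {
  readAllText: spy(() => 'input'),
  writeToFile: spy(),
};
const textProcessor = {
  convertMdText: spy(() => 'altered input'),
};

new TextConversionCoordinator(fileProcessor, textProcessor).convertText();

// Each dependency was called once, with the data from the previous step.
console.log(fileProcessor.readAllText.calls.length); // 1
console.log(textProcessor.convertMdText.calls[0]);   // [ 'input' ]
console.log(fileProcessor.writeToFile.calls[0]);     // [ 'altered input' ]
```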

And thanks to dependency inversion, we were able to write unit tests that cover every scenario TextConversionCoordinator.convertText can run into: success, unconverted text, and a failure at each of the three steps.

Dependency inversion and strongly typed languages

In our examples, we have created some arbitrary objects to replace our dependencies. As long as these objects have all the methods that we are calling, the code will work. And we were able to use arbitrary objects as our dependencies because JavaScript is a dynamically-typed language. Any variable can have any data type assigned to it.

But what if we used a strongly-typed language? Or what if we used a strongly-typed superset of JavaScript, such as TypeScript? How would we adhere to the dependency inversion principle then?

Well, it would still be simple. If the programming language that you are using forces you to specify a data type as your parameter, you can specify an `interface` as your data type. This will then allow you to insert absolutely any `class` that implements this interface. So, you can still mock the functionality rather easily if you want your dependency to produce consistent outputs in unit tests.

Dependency inversion is not only useful in unit tests

Although I have given unit tests as an example of why dependency inversion principle is important, the importance of this principle goes far beyond unit tests.

In our working program, we could pass an implementation of FileProcessorInterface that, instead of reading a file on the disk, reads a file from a web location. After all, TextConversionCoordinator doesn’t care which file the text was extracted from, only that the text was extracted.

So, dependency inversion will add flexibility to our program. If the external logic changes, TextConversionCoordinator will be able to handle it just the same. No changes will need to be applied to this class.
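As a minimal sketch of that flexibility, the hypothetical `InMemoryFileProcessor` below keeps its "files" in a `Map` instead of on disk while exposing the same readAllText and writeToFile methods as FileProcessor. A web-based reader, as mentioned above, would usually be asynchronous, which would also require making convertText asynchronous, so an in-memory source is used here to keep the example synchronous. The coordinator is a reduced, happy-path-only copy of TextConversionCoordinator, and the text processor is a toy stand-in.

```javascript
// Reduced, happy-path-only copy of TextConversionCoordinator (the real
// one also records errors). It only depends on method names.
class TextConversionCoordinator {
  #fileProcessor;
  #textProcessor;

  constructor(fileProcessor, textProcessor) {
    this.#fileProcessor = fileProcessor;
    this.#textProcessor = textProcessor;
  }

  convertText() {
    const inputText = this.#fileProcessor.readAllText();
    const outputText = this.#textProcessor.convertMdText(inputText);
    this.#fileProcessor.writeToFile(outputText);
    return outputText !== inputText;
  }
}

// Hypothetical alternative to FileProcessor: stores "files" in a Map
// but exposes the same readAllText/writeToFile methods.
class InMemoryFileProcessor {
  #store;
  #inputKey;
  #outputKey;

  constructor(store, inputKey, outputKey) {
    this.#store = store;
    this.#inputKey = inputKey;
    this.#outputKey = outputKey;
  }

  readAllText() {
    if (!this.#store.has(this.#inputKey)) {
      throw new Error(`No entry named ${this.#inputKey}`);
    }
    return this.#store.get(this.#inputKey);
  }

  writeToFile(text) {
    this.#store.set(this.#outputKey, text);
  }
}

const store = new Map([['notes.md', 'Hello *world*']]);
const fileProcessor = new InMemoryFileProcessor(store, 'notes.md', 'notes.html');
// Toy text processor for illustration only.
const textProcessor = {
  convertMdText: (text) => text.replace('*', '<em>').replace('*', '</em>'),
};

new TextConversionCoordinator(fileProcessor, textProcessor).convertText();
console.log(store.get('notes.html')); // Hello <em>world</em>
```

The coordinator's code did not change at all; only the object passed to its constructor did.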

The opposite of the dependency inversion principle is tight coupling. When a class is tightly coupled to concrete implementations of its dependencies, this flexibility disappears. This is why tight coupling should be avoided.

Wrapping up

This concludes our overview of how to apply SOLID principles in JavaScript. I hope you found this information helpful and that you will now be able to apply SOLID principles in your own JavaScript projects.

The content of this article originally came from the JavaScript edition of my book called The easiest way to learn design patterns. As well as covering SOLID principles, the book shows how to apply design patterns in JavaScript. And design patterns are a really powerful tool in a software developer’s arsenal. Not only will they help you to be efficient at your job and write much more readable and maintainable code, but knowing them will also help you stand out as a candidate applying for jobs at reputable IT companies.

Companies do occasionally ask if you know design patterns. They either ask directly or they give you technical tests that will be much easier to complete if you know design patterns. I have seen this in many job interviews, and this was one of the things that motivated me to write this book.

I want to help as many developers as possible to be as successful in their career as they can be. This is also why I made this book highly accessible by putting a price on it that is probably a third of what people would normally pay for a technical book. So perhaps you should check it out.

All the best and happy coding!